castshelf.com

AI-powered podcast show notes generator.

Working prototype (you’ll have to ask me for credentials)

Summary

Role: Product designer operating as solo PM + pseudo-engineer with AI tools

Goal: Teach myself modern AI tooling by shipping a real, testable product that could, in theory, stand as a tiny business.

I built Castshelf, a tool that takes a podcast recording and generates detailed show notes: topics discussed, people/books/products mentioned, and relevant links.

The core of this project was about:

Learning to prototype with AI beyond Figma

Treating AI models as building blocks in a product system

Making concrete product and technical tradeoffs (scope, stack, cost, testability)

Getting to a working, demo-able MVP, not a perfect architecture

I shipped a functioning prototype, scaled back a hugely over-engineered initial design, cut processing costs by roughly 10x, and built the infrastructure needed for safe user testing, even though I stopped just short of real user interviews.

Why

I wanted to learn AI tools on a project that would be useful, at least to me.

I listen to a lot of podcasts. Often I hear about something cool, and the show notes don’t have a link to it.

So I wanted to have an easy way to extract the valuable knowledge from the recordings. But I also wanted to make something that has at least some theoretical business potential.

There are two kinds of show notes: meticulous, detailed notes with all the timestamps and links, and a sparse three-line episode synopsis.

I imagine that a lot of the people who don’t put together elaborate show notes would like to fix that, but don’t have the time/energy/resources for it. And I imagine some people who do put together detailed show notes would like it to be less of a chore.

There are also business considerations: good show notes fuel SEO and discoverability.

On the other hand, some shows rely entirely on word of mouth and don’t care about this sort of thing. Before committing my time and tokens, I decided to check whether this is even a market.

Turns out, it is. There are products that offer something similar, and ChatGPT and I talked through a potential business case for this one. But it’s ChatGPT, so who knows how robust that case actually is. Still, it’s robust enough to justify building an MVP to gauge actual interest.

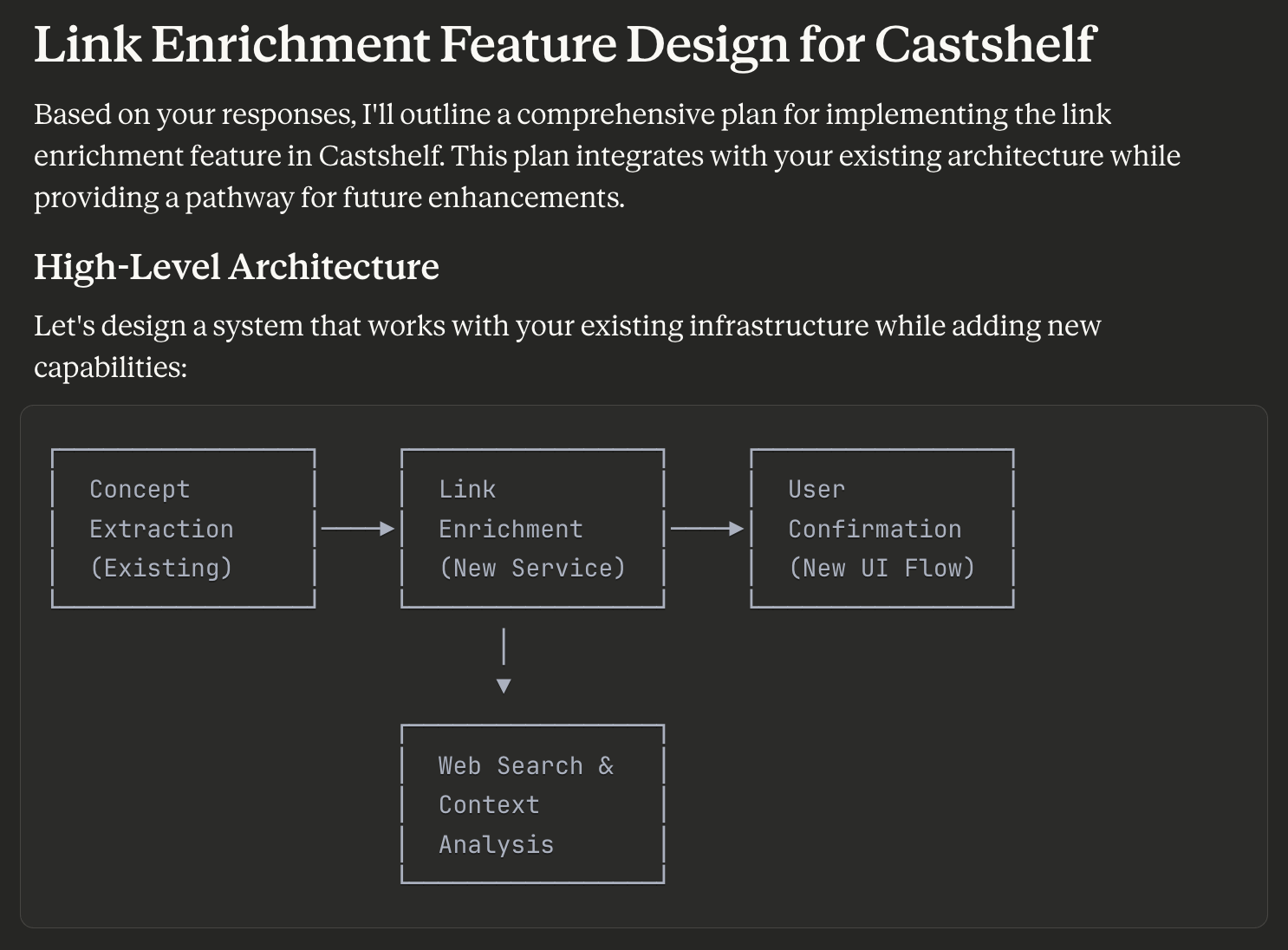

How it works

Upload, analyze, get the show notes.

In more detail:

Upload: Cloudflare bucket

Transcribe: OpenAI Whisper via the web API

Concept analysis: ChatGPT/Claude via the web API

Link search: Claude, via my Google search ID

Application logic: written with Claude in Replit
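To make the flow concrete, here is a minimal Python sketch of those stages wired together. It is not the Castshelf code: every function name and the `ShowNotes` shape are hypothetical, the upload, transcription, and analysis steps are offline stubs standing in for the bucket, Whisper, and ChatGPT/Claude calls, and Claude’s role in the link search is left out. The only real API it touches is the Google Custom Search JSON API request format (`key`, `cx`, `q`, `num`), which it builds but never sends.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional
from urllib.parse import urlencode


@dataclass
class ShowNotes:
    """Hypothetical result shape: topics, mentions, and a link per mention."""
    topics: list[str] = field(default_factory=list)
    mentions: list[str] = field(default_factory=list)
    links: dict[str, str] = field(default_factory=dict)


def upload(audio: bytes) -> str:
    # Stub: a real version would put the file in object storage
    # and return the stored object's key.
    return f"uploads/episode-{len(audio)}-bytes.mp3"


def transcribe(object_key: str) -> str:
    # Stub: a real version would send the audio to a speech-to-text
    # API (e.g. Whisper) and return the transcript text.
    return "Today we talked about sourdough and the book Flour Water Salt Yeast."


def analyze(transcript: str) -> ShowNotes:
    # Stub: a real version would prompt an LLM to return topics and
    # mentions as JSON, then parse that JSON into ShowNotes.
    return ShowNotes(topics=["sourdough"], mentions=["Flour Water Salt Yeast"])


def custom_search_request(query: str, api_key: str, engine_id: str) -> str:
    # Builds (but does not send) a Google Custom Search JSON API request.
    # The JSON response lists results under "items", each with a "link".
    params = {"key": api_key, "cx": engine_id, "q": query, "num": 1}
    return "https://www.googleapis.com/customsearch/v1?" + urlencode(params)


def find_links(notes: ShowNotes, search: Callable[[str], Optional[str]]) -> ShowNotes:
    # Ask the search function for one URL per mention; skip misses.
    for mention in notes.mentions:
        url = search(mention)
        if url:
            notes.links[mention] = url
    return notes


def run_pipeline(audio: bytes, search: Callable[[str], Optional[str]]) -> ShowNotes:
    # Upload -> transcribe -> analyze -> link search, in that order.
    key = upload(audio)
    transcript = transcribe(key)
    notes = analyze(transcript)
    return find_links(notes, search)


if __name__ == "__main__":
    fake_search = lambda q: "https://example.com/search?q=" + q.replace(" ", "+")
    print(run_pipeline(b"\x00" * 1024, fake_search).links)
```

Passing the search step in as a function keeps the pipeline testable offline; a real search function would send the request URL and return the first result’s `link`.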

First milestone: Demo-able MVP

Main challenges:

The right tools

The right scope

Not quitting

To be frank, this wasn’t my first stab at this problem, so I already knew the core flow I wanted to see.

The first version had a very elaborate database design that stored extracted concepts and tracked topics across episodes and shows. Dropping it was an essential pivot.

However, this was my first attempt to build it as a web application. I overestimated my skill, my patience, and how much AI could actually help me, so I had to pivot away from the stack I initially chose.

My initial idea was to get the most bang for my buck, which meant avoiding prepackaged, productized solutions at every step and going for the “low-level” options.

Specifically: instead of OpenAI’s hosted API for transcribing audio to text, use Cloudflare Workers + Whisper to save on API costs; instead of ChatGPT or Claude for analyzing the content, use Cloudflare Workers + Assembly to analyze the audio. And a few other choices like that.

Rather quickly, the combined complexity of the pipeline and the code, the limits of my expertise, and the AI’s unruliness led me to abandon this approach. I could have stuck with it, but my priority was a demo-able MVP, not a full-stack developer badge. So I switched to Replit.

Replit abstracts away a lot of infrastructural complexity, which proved essential once I realized that the “testing grounds” would require some very specific features that would have buried me had I stayed on my previous course.

Scope

I had to prioritize features useful for potential user testing over the UI I actually dreamed of.

UI-wise, everything that wasn’t completely essential was deprioritized:

Cool previews, clever inline editing, speaker recognition and transcript formatting in general, user pictures. Even basic visual polish was sacrificed.

Beyond being basic product common sense, this is essential with AI coding, because AI tools are all but guaranteed to get lost in complicated codebases.

So instead I focused on the features I considered most essential for potential user testing:

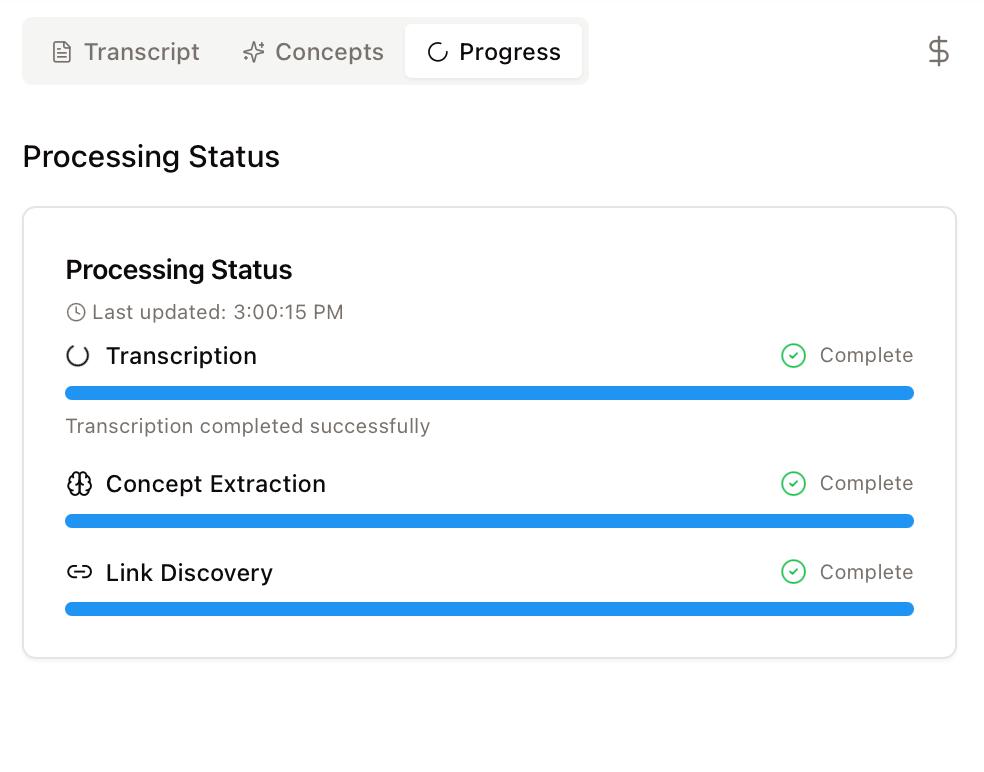

processing costs

user management

budget display

I needed to be able to let people I don’t really know into the system. I needed them to try it out and not get scared of the costs. I needed to have control over their budgets so they don’t drain my API balances with ChatGPT and Claude.

I’m pleased to report that all of that is in place. I managed to cut processing costs by roughly 10x: at the start, the 44-second test sample I used cost 12–13 cents to process; after optimization, it dropped to 1 cent.

The actual cost varies from recording to recording, depending on the number of links and similar factors.
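As a rough sanity check, the sample figures can be normalized per minute of audio. This is back-of-the-envelope arithmetic on the single 44-second sample only; the 60-minute lines assume cost scales linearly with duration, which varying link counts mean it will not do exactly.

```latex
\begin{aligned}
\text{reduction factor} &= \frac{12 \text{ to } 13\ \text{cents}}{1\ \text{cent}} = 12\times \text{ to } 13\times \\
\text{before, per minute} &= \frac{12 \text{ to } 13\ \text{cents}}{44/60\ \text{min}} \approx 16.4 \text{ to } 17.7\ \text{cents/min} \\
\text{after, per minute} &= \frac{1\ \text{cent}}{44/60\ \text{min}} \approx 1.4\ \text{cents/min} \\
\text{60-min episode, before} &\approx 60 \times (16.4 \text{ to } 17.7) \approx 982 \text{ to } 1064\ \text{cents} \\
\text{60-min episode, after} &\approx 60 \times 1.36 \approx 82\ \text{cents}
\end{aligned}
```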

I also have an admin panel for creating test users and for assigning and viewing their budgets.

Users, in turn, have full visibility into their spending, so when they try out the tool they get an idea of how much it would cost them to run.
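The budget controls boil down to a small ledger per user. The Python sketch below is one hypothetical way to model it, not the actual implementation: the class and method names are invented, and it assumes costs are tracked in dollars as `Decimal` values so fractions of a cent (like the roughly 1-cent test runs) add up exactly.

```python
from dataclasses import dataclass, field
from decimal import Decimal


class BudgetExceeded(Exception):
    """Raised when a job's estimated cost does not fit the remaining budget."""


@dataclass
class UserBudget:
    # Hypothetical per-user ledger; dollar amounts as Decimal so that
    # fractions of a cent add up exactly.
    limit: Decimal
    spent: Decimal = Decimal("0")
    history: list = field(default_factory=list)  # (job name, cost) pairs

    @property
    def remaining(self) -> Decimal:
        return self.limit - self.spent

    def assign(self, new_limit: Decimal) -> None:
        # Admin action: set or raise the user's total budget.
        self.limit = new_limit

    def ensure_fits(self, estimate: Decimal) -> None:
        # Check before calling paid APIs, so a job never starts without budget.
        if estimate > self.remaining:
            raise BudgetExceeded(f"needs ${estimate}, only ${self.remaining} left")

    def charge(self, job: str, cost: Decimal) -> None:
        # Record the actual cost afterwards; it can differ from the estimate.
        self.spent += cost
        self.history.append((job, cost))
```

A processing job would call `ensure_fits` with an estimate before touching any paid API and `charge` with the actual cost afterwards; the admin panel maps to `assign`, and the user-facing spending view to `remaining` and `history`. Because the actual cost can exceed the estimate, a user can end slightly over budget on their last job, which is usually an acceptable tradeoff for a test setup.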

Testing that didn’t happen (yet)

Two people who actually work in podcast production have expressed interest. But I haven’t managed to push myself to run any actual tests. Probably because “well, I solved it, didn’t I?”

But some day…